Latest revision as of 06:31, 5 May 2007

TB -> TB-2007-02-HACLUSTER

Contents

- 1 Overview

- 2 Prerequisites

- 3 Cluster Software Setup

- 4 Scalix Setup

- 5 Scalix Cluster Integration

- 6 Upgrading Scalix in the Cluster

Overview

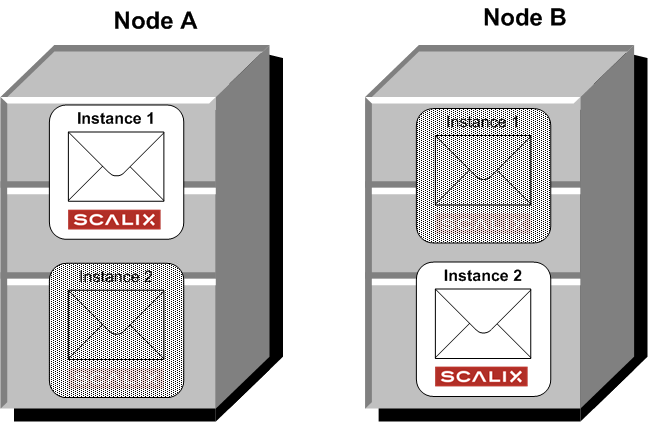

The most common high-availability setup is a two-node cluster in which each node runs an active instance of Scalix and is set up for mutual failover. With both nodes up and running, instance "mail1" runs on Node A while instance "mail2" runs on Node B.

If either node fails, the instance that was active on it fails over to the other node, which then runs both instances. To users the failover is transparent, because each instance is associated with a virtual hostname (also called the instance hostname or service hostname) and IP address through which all user interaction takes place.

The Scalix message store and database are kept on external shared storage. Physically, the storage can be accessed by both nodes at all times. However, each instance has its own message store, so the cluster software manages access to the storage in such a way that each message store is only accessed by the node that is running the associated instance.

Various shared storage technologies such as multi-initiator SCSI or a SAN based on iSCSI or FibreChannel can be used. Scalix does not support the use of NFS for shared storage.

Scalix Enterprise Edition software provides multi-instance and virtual hostname support. The actual cluster monitoring and failover, as well as access to the storage and management of network addresses and hostnames, are provided by the clustering software. We have tested HA-Scalix with RedHat Cluster Suite; however, it should be possible to create similar configurations with most other cluster products.

Prerequisites

Software Versions

- This document assumes you are running Scalix 11.0.4 or later on SuSE Linux Enterprise Server 10 or on RedHat Enterprise Linux 4

Network Address and Hostname Layout

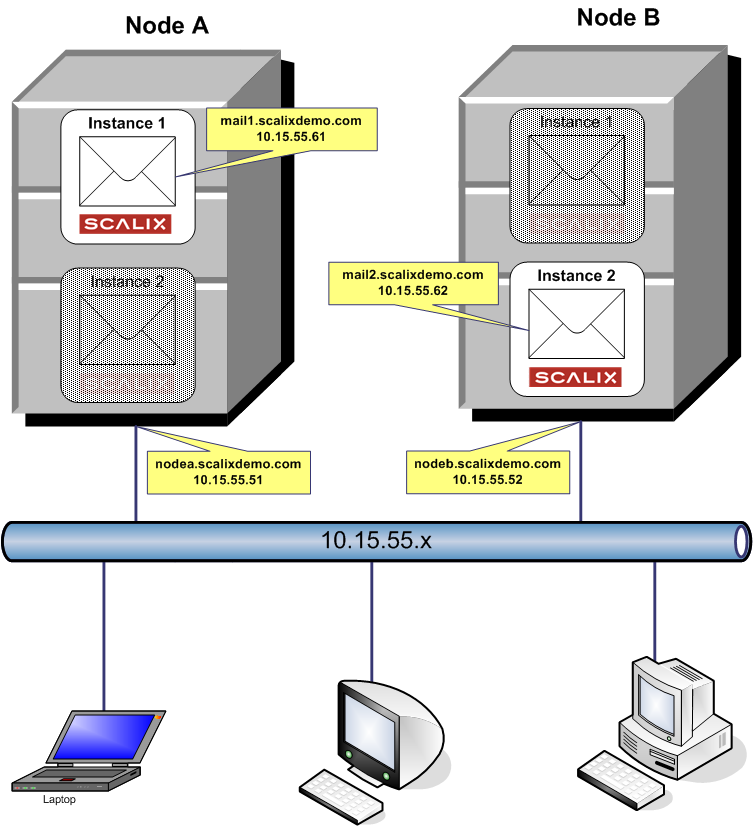

Each node has one static IP address of its own. This address is used only for administration: it stays with the node, never fails over, and is not used to access Scalix services.

In the example, we use the following IP addresses and hostnames:

    Node A               Node B
    nodea.scalix.demo    nodeb.scalix.demo
    192.168.100.11       192.168.100.12

Each instance also has an associated IP address. End users access Scalix through this address, which moves between the nodes together with the instance. In the example, we use the following IP addresses and hostnames:

    Instance 1           Instance 2
    mail1.scalix.demo    mail2.scalix.demo
    192.168.100.21       192.168.100.22

All IP addresses and hostnames should be registered in DNS so that they can be resolved both forward and reverse. In addition, the IP address mappings should be recorded in the /etc/hosts config file on the cluster nodes so that the cluster does not depend on DNS availability.
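As a minimal sketch of the /etc/hosts mappings, the function below prints one entry per node and per instance, using the example addresses and hostnames from the tables above. The function name and the short aliases in the third column are assumptions made for illustration; adjust everything to your site.

```shell
#!/bin/sh
# Print /etc/hosts entries for the example cluster.
# Addresses and FQDNs are the example values from this section;
# the short aliases (nodea, mail1, ...) are an assumption.
cluster_hosts_entries() {
    cat <<'EOF'
192.168.100.11  nodea.scalix.demo  nodea
192.168.100.12  nodeb.scalix.demo  nodeb
192.168.100.21  mail1.scalix.demo  mail1
192.168.100.22  mail2.scalix.demo  mail2
EOF
}

# Review the output first, then append it on each node, for example:
#   cluster_hosts_entries >> /etc/hosts
cluster_hosts_entries
```

The same four lines would go on both nodes, so that each node can resolve the other node and both instance hostnames without DNS.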

Storage Configuration

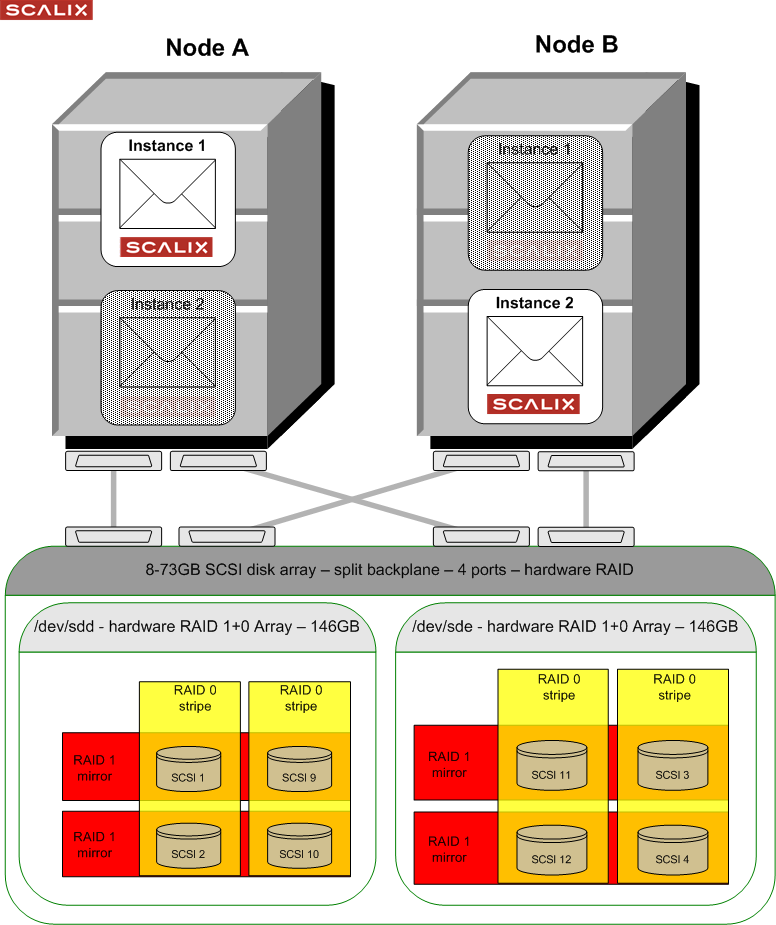

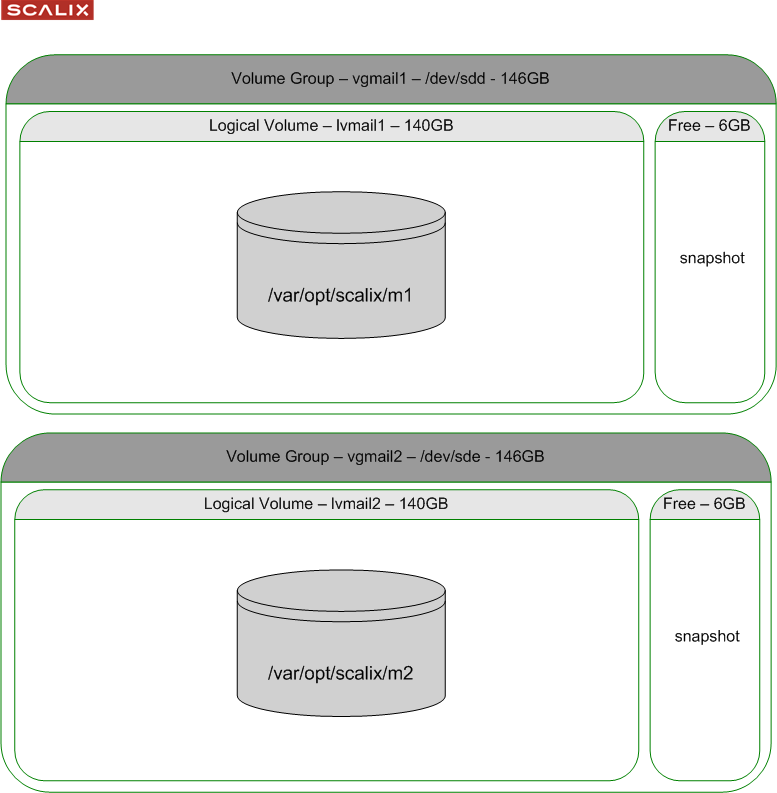

Each instance needs its own dedicated storage area. Scalix recommends the use of LVM, as this enables filesystem resizing as well as the ability to snapshot a filesystem for backup purposes. From an LVM point of view, each instance should have its own LVM Volume Group (VG), because a VG can only be activated and accessed by one of the hosts at a time. For the example configuration, we will use a simple disk configuration as follows:

We take two disks or LUNs on the shared storage, one for each instance, delete any partitions currently on the disks, then create a volume group and a logical volume for the message store and Scalix data area. You will also need to initialize the filesystem on the logical volume:

- On Node A:

    [root@nodea ~]# dd if=/dev/zero of=/dev/sdd bs=512 count=1
    1+0 records in
    1+0 records out
    [root@nodea ~]# pvcreate /dev/sdd
      Physical volume "/dev/sdd" successfully created
    [root@nodea ~]# vgcreate vgmail1 /dev/sdd
      Volume group "vgmail1" successfully created
    [root@nodea ~]# lvcreate -L 140G -n lvmail1 vgmail1
      Logical volume "lvmail1" created
    [root@nodea ~]# vgscan
      Reading all physical volumes. This may take a while...
      Found volume group "vgmail1" using metadata type lvm2
      Found volume group "VolGroup00" using metadata type lvm2
    [root@nodea ~]# mkfs.ext3 /dev/vgmail1/lvmail1
    mke2fs 1.35 (28-Feb-2004)
    ...
    Writing superblocks and filesystem accounting information: done
    [root@nodea ~]# vgchange -a y vgmail1
      1 logical volume(s) in volume group "vgmail1" now active
    [root@nodea ~]# vgchange -c y vgmail1
      Volume group "vgmail1" successfully changed

- On Node B:

    [root@nodeb ~]# dd if=/dev/zero of=/dev/sde bs=512 count=1
    1+0 records in
    1+0 records out
    [root@nodeb ~]# pvcreate /dev/sde
      Physical volume "/dev/sde" successfully created
    [root@nodeb ~]# vgcreate vgmail2 /dev/sde
      Volume group "vgmail2" successfully created
    [root@nodeb ~]# lvcreate -L 140G -n lvmail2 vgmail2
      Logical volume "lvmail2" created
    [root@nodeb ~]# vgscan
      Reading all physical volumes. This may take a while...
      Found volume group "vgmail2" using metadata type lvm2
      Found volume group "VolGroup00" using metadata type lvm2
    [root@nodeb ~]# mkfs.ext3 /dev/vgmail2/lvmail2
    mke2fs 1.35 (28-Feb-2004)
    ...
    Writing superblocks and filesystem accounting information: done
    [root@nodeb ~]# vgchange -a y vgmail1
      1 logical volume(s) in volume group "vgmail1" now active
    [root@nodeb ~]# vgchange -a y vgmail2
      1 logical volume(s) in volume group "vgmail2" now active
    [root@nodeb ~]# vgchange -c y vgmail1
      Volume group "vgmail1" successfully changed
    [root@nodeb ~]# vgchange -c y vgmail2
      Volume group "vgmail2" successfully changed

- On Node A:

    [root@nodea ~]# vgchange -a y vgmail2
      1 logical volume(s) in volume group "vgmail2" now active
    [root@nodea ~]# vgchange -c y vgmail2
      Volume group "vgmail2" successfully changed
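The per-instance storage steps follow one pattern, which the sketch below prints as a command list for review. It only echoes the commands and executes nothing, because the dd and mkfs.ext3 steps destroy existing data on the device. The function name lvm_plan is hypothetical; the vg/lv naming simply mirrors the example (vgmail1, lvmail1).

```shell
#!/bin/sh
# Print (do not execute) the storage preparation commands for one instance.
# Usage: lvm_plan INSTANCE DEVICE SIZE
lvm_plan() {
    inst=$1
    dev=$2
    size=$3
    vg="vg${inst}"   # volume group name, as in the example: vgmail1
    lv="lv${inst}"   # logical volume name, as in the example: lvmail1
    echo "dd if=/dev/zero of=${dev} bs=512 count=1"
    echo "pvcreate ${dev}"
    echo "vgcreate ${vg} ${dev}"
    echo "lvcreate -L ${size} -n ${lv} ${vg}"
    echo "mkfs.ext3 /dev/${vg}/${lv}"
    echo "vgchange -a y ${vg}"
    echo "vgchange -c y ${vg}"
}

# Example: the plan for instance mail1 on /dev/sdd.
lvm_plan mail1 /dev/sdd 140G
```

Check the device name carefully against your shared storage layout before running any of the printed commands by hand.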

Next, you need to create the mountpoints. For this, you need to determine your instance's root directory. The pathname for this will be

/var/opt/scalix/az

where az is the first and the last letter of your instance name, e.g. if your instances are named mail1 and mail2, your mountpoints will be

/var/opt/scalix/m1

and

/var/opt/scalix/m2

respectively.

Create those mountpoints on both nodes:

- On Node A:

    [root@nodea init.d]# mkdir -p /var/opt/scalix/m1
    [root@nodea init.d]# mkdir -p /var/opt/scalix/m2

- On Node B:

    [root@nodeb init.d]# mkdir -p /var/opt/scalix/m1
    [root@nodeb init.d]# mkdir -p /var/opt/scalix/m2
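The naming rule for the instance root directory (first and last letter of the instance name) can be sketched in portable shell as follows. The function name scalix_instance_dir is hypothetical and not part of Scalix; it only illustrates the rule stated above.

```shell
#!/bin/sh
# Derive the instance root directory from the instance name:
# /var/opt/scalix/<first letter><last letter>
scalix_instance_dir() {
    name=$1
    first=${name%"${name#?}"}   # strip everything after the first character
    last=${name#"${name%?}"}    # strip everything before the last character
    echo "/var/opt/scalix/${first}${last}"
}

scalix_instance_dir mail1
scalix_instance_dir mail2
```

On a node, the mountpoint could then be created with `mkdir -p "$(scalix_instance_dir mail1)"`.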

Cluster Software Setup

RedHat Cluster Suite (RHCS)

Installation

Refer to RedHat documentation for Hardware Prerequisites and basic cluster software installation. Watch out for the following items:

- Install the cluster software on both nodes.

- Run system-config-cluster on the first node. After performing the basic configuration, copy the cluster.conf file to the other node (as described in the cluster documentation).

- You can use the default Distributed Lock Manager (DLM). You don't need the Grand Unified Lock Manager (GULM).

- If you have more than one cluster on the same subnet, make sure to modify the default cluster name "alpha_cluster". This can be found in the cluster.conf file.

Creating the Scalix service

The service that represents the Scalix instance to the cluster should be created before starting the Scalix installation. The service provides the virtual IP address and the shared storage mountpoint, so that Scalix can be installed on top of them. To create the Scalix service, follow these steps:

1. Make sure the cluster software is started on both nodes:

    service ccsd start; service cman start; service fenced start; service rgmanager start

2. Using system-config-cluster, add the services for both mail1 and mail2:

- Use the instance name, e.g. "mail1", as the service name

- Uncheck "Autostart this Service" and set recovery policy to "Disable"

- Create a new resource for the service to represent the virtual IP address defined for the instance

- Create a new resource for the service to represent the filesystem and mountpoint:

    Name: mail1fs
    File System Type: ext3
    Mountpoint: /var/opt/scalix/m1
    Device: /dev/vgmail1/lvmail1
    Options:
    File System ID:
    Force Unmount: Checked
    Reboot host node: Checked
    Check file system: Checked

3. Bring up the services on the respective primary node:

    clusvcadm -e mail1 -m nodea.scalixdemo.com
    clusvcadm -e mail2 -m nodeb.scalixdemo.com

4. At this point, it's good to check if the IP address and filesystem resources are available.

- On Node A:

    [root@nodea ~]# clustat
    Member Status: Quorate

      Member Name                  Status
      ------ ----                  ------
      nodea.scalixdemo.com         Online, Local, rgmanager
      nodeb.scalixdemo.com         Online, rgmanager

      Service Name     Owner (Last)               State
      ------- ----     ----- ------               -----
      mail1            (nodea.scalixdemo.com)     disabled
      mail2            (nodeb.scalixdemo.com)     disabled

    [root@nodea ~]# mount
    ...
    /dev/mapper/vgmail1-lvmail1 on /var/opt/scalix/m1 type ext3 (rw)

    [root@nodea ~]# ip addr show
    ...
    2: eth0: <BROADCAST,MULTICAST,UP> mtu 1500 qdisc pfifo_fast qlen 1000
        link/ether 00:06:5b:04:f4:25 brd ff:ff:ff:ff:ff:ff
        inet 169.254.0.51/24 brd 169.254.0.255 scope global eth0
        inet 169.254.0.61/32 scope global eth0
        inet6 fe80::206:5bff:fe04:f425/64 scope link
           valid_lft forever preferred_lft forever
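The manual check in step 4 can be scripted. The two helpers below are a sketch with hypothetical names: each reads command output on standard input and succeeds only if the expected mountpoint or address appears. They match fixed strings only and make no assumption about which device or interface is used.

```shell
#!/bin/sh
# Succeeds if the mount listing on stdin shows a filesystem
# mounted on the given mountpoint.
is_mounted() {
    grep -qF -- " on $1 type "
}

# Succeeds if the 'ip addr show' output on stdin lists the given IPv4 address.
has_ip() {
    grep -qF -- "inet $1/"
}

# Usage on a cluster node (the address is the example instance address):
#   mount | is_mounted /var/opt/scalix/m1 && echo "mail1 filesystem mounted"
#   ip addr show | has_ip 192.168.100.21 && echo "mail1 address present"
```

Running both checks on each node after enabling the services shows quickly whether the virtual IP address and the shared filesystem ended up on the intended node.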

Scalix Setup

Installation

You can now install Scalix. Follow the steps documented in the Scalix Installation Guide, with the following changes:

- When starting the installer, specify both the instance name and the fully-qualified hostname on the command line:

- On Node A:

[root@nodea ~]# ./scalix-installer --instance=mail1 --hostname=mail1.scalixdemo.com

- Select 'Typical install'

- On Node B:

[root@nodeb ~]# ./scalix-installer --instance=mail2 --hostname=mail2.scalixdemo.com

- Select 'Typical install'

- When asked for the 'Secure Communications' password, make sure to use the same password on both nodes.

Post-Install Tasks

Setting up Scalix Management Services

One of the instances needs to be nominated as the Admin instance ("Ubermanager"). The other instances will be managed through this. In this example, we assume that "mail1" will be the admin instance. For the instances not running the admin server, follow these steps:

- De-register the Management Console and Server from the node:

[root@nodeb ~]# /opt/scalix-tomcat/bin/sxtomcat-webapps --del mail2 caa sac

- Reconfigure the Management Agent to report to the Admin instance:

[root@nodeb ~]# ./scalix-installer --instance=mail2 --hostname=mail2.scalixdemo.com

- Select "Reconfigure Scalix Components"

- Select "Scalix Management Agent"

- Enter the fully-qualified virtual hostname of the Admin instance when asked for the host where Management Services are installed.

- Re-enter the 'Secure Communications' password. Make sure you use the same value as above.

- Don't opt to Create Admin Groups

Setting the Tomcat shutdown port

Each instance needs a Tomcat shutdown port number that is unique across the cluster. By default, all instances are set up to use port 8005, so the port has to be changed on all but one instance. Use the following steps:

- Set a unique shutdown port number with the following command:

[root@nodeb ~]# /opt/scalix-tomcat/bin/sxtomcat-modify-instance -p 8006 mail2

- Restart Tomcat

[root@nodeb ~]# service scalix-tomcat restart
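With more than two instances it helps to plan the port numbers up front. The sketch below prints one sxtomcat-modify-instance command for every instance except the first, which keeps the default 8005. Counting upwards from 8005 is an assumption made for illustration; any set of unused, distinct ports would do. Nothing is executed.

```shell
#!/bin/sh
# Print the sxtomcat-modify-instance commands needed to give each
# instance a unique Tomcat shutdown port.
# The first instance keeps 8005; each further one gets the next number.
tomcat_port_plan() {
    port=8005
    first=1
    for inst in "$@"; do
        if [ "$first" -eq 1 ]; then
            first=0
        else
            port=$((port + 1))
            echo "/opt/scalix-tomcat/bin/sxtomcat-modify-instance -p ${port} ${inst}"
        fi
    done
}

tomcat_port_plan mail1 mail2
```

For the example cluster this prints the single command shown above for mail2; Tomcat still has to be restarted afterwards.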

Disabling Scalix Auto-Start

Before the Scalix services can be integrated into the cluster, they need to be excluded from starting when the system boots. First, shut down Scalix manually. To do this, execute the following commands on all nodes:

    [root@node ~]# service scalix-tomcat stop
    [root@node ~]# service scalix-postgres stop
    [root@node ~]# service scalix stop

Remove the Scalix services from the system auto-start configuration. Again, this must be done on all nodes:

    [root@node ~]# chkconfig --del scalix
    [root@node ~]# chkconfig --del scalix-tomcat
    [root@node ~]# chkconfig --del scalix-postgres
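Since both steps have to be repeated on every node, they can be wrapped in a small helper. This is a sketch only: the function names and the DRY_RUN switch are assumptions, and by default the commands are printed rather than executed.

```shell
#!/bin/sh
# Scalix services in the stop order used above.
SCALIX_SERVICES="scalix-tomcat scalix-postgres scalix"

# Print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Stop all Scalix services, then remove them from the boot configuration.
disable_scalix_autostart() {
    for svc in $SCALIX_SERVICES; do
        run service "$svc" stop
    done
    for svc in $SCALIX_SERVICES; do
        run chkconfig --del "$svc"
    done
}

disable_scalix_autostart
```

Setting `DRY_RUN=0` as root on a node would run the six commands for real.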

Registering all instances on all nodes

Each instance is only registered on the node where it was created. For clustered operations, all instances need to be registered on all nodes. Instance registration information is kept in the /etc/opt/scalix/instance.cfg config file. You need to merge the contents of these files and install the combined file on all nodes. At the same time, you should also disable instance autostart in the registration file. In the example, the file should look like this on all nodes:

    OMNAME=mail1
    OMHOSTNAME=mail1.scalixdemo.com
    OMDATADIR=/var/opt/scalix/m1/s
    OMAUTOSTART=FALSE
    #
    OMNAME=mail2
    OMHOSTNAME=mail2.scalixdemo.com
    OMDATADIR=/var/opt/scalix/m2/s
    OMAUTOSTART=FALSE
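A merged registration file of this shape can be generated rather than edited by hand. The sketch below reproduces the example stanzas; the function name is hypothetical, the data directory follows the first-and-last-letter rule from the storage section, and the "#" separator line is simply copied from the example. The output goes to standard output so it can be reviewed before it replaces /etc/opt/scalix/instance.cfg.

```shell
#!/bin/sh
# Print the instance.cfg stanza for one instance, with autostart disabled.
# Usage: instance_stanza INSTANCE VIRTUAL_HOSTNAME
instance_stanza() {
    name=$1
    host=$2
    first=${name%"${name#?}"}   # first character of the instance name
    last=${name#"${name%?}"}    # last character of the instance name
    echo "OMNAME=${name}"
    echo "OMHOSTNAME=${host}"
    echo "OMDATADIR=/var/opt/scalix/${first}${last}/s"
    echo "OMAUTOSTART=FALSE"
}

# Combined file contents for the two example instances.
instance_stanza mail1 mail1.scalixdemo.com
echo "#"
instance_stanza mail2 mail2.scalixdemo.com
```

Back up the existing instance.cfg on each node before installing a combined file.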

Setting up Apache integration on all nodes

Registering the Rules Wizard with the virtual host

For the web-based Scalix Rules Wizard to be available, its configuration file must be copied into the Apache connector config directories. Execute the following commands:

- On Node A:

    [root@nodea ~]# cd /etc/opt/scalix-tomcat/connector
    [root@nodea connector]# cp /opt/scalix/global/httpd/scalix-web-client.conf jk/app-mail1.srw.conf
    [root@nodea connector]# cp /opt/scalix/global/httpd/scalix-web-client.conf ajp/app-mail1.srw.conf

- On Node B:

    [root@nodeb ~]# cd /etc/opt/scalix-tomcat/connector
    [root@nodeb connector]# cp /opt/scalix/global/httpd/scalix-web-client.conf jk/app-mail2.srw.conf
    [root@nodeb connector]# cp /opt/scalix/global/httpd/scalix-web-client.conf ajp/app-mail2.srw.conf

Registering all workers for mod_jk

All workers for Apache mod_jk.so must be registered in /etc/opt/scalix-tomcat/connector/jk/workers.conf. In the example, the file should look like this on all nodes:

JkWorkerProperty worker.list=mail1,mail2
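For clusters with more instances, the worker list line can be built from the instance names. This is a small sketch with a hypothetical function name; it assumes, as in the example, that each worker is named after its instance.

```shell
#!/bin/sh
# Build the workers.conf line from a list of instance names.
workers_conf_line() {
    list=""
    for inst in "$@"; do
        if [ -z "$list" ]; then
            list=$inst
        else
            list="${list},${inst}"
        fi
    done
    echo "JkWorkerProperty worker.list=${list}"
}

workers_conf_line mail1 mail2
```

The printed line is what would go into /etc/opt/scalix-tomcat/connector/jk/workers.conf on every node.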

Copying the Apache Tomcat connector config files

All files in /etc/opt/scalix-tomcat/connector/ajp and /etc/opt/scalix-tomcat/connector/jk must now be copied between all nodes so that those directories have the same contents on all nodes in the cluster. In the example, the directories must contain these files on both nodes:

    [root@nodea connector]# ls -R *
    ajp:
    app-mail1.api.conf  app-mail1.srw.conf      app-mail2.srw.conf
    app-mail1.caa.conf  app-mail1.webmail.conf  app-mail2.webmail.conf
    app-mail1.m.conf    app-mail2.api.conf      instance-mail1.conf
    app-mail1.res.conf  app-mail2.m.conf        instance-mail2.conf
    app-mail1.sac.conf  app-mail2.res.conf
    app-mail1.sis.conf  app-mail2.sis.conf

    jk:
    app-mail1.api.conf  app-mail1.srw.conf      app-mail2.webmail.conf
    app-mail1.caa.conf  app-mail1.webmail.conf  instance-mail1.conf
    app-mail1.m.conf    app-mail2.api.conf      instance-mail2.conf
    app-mail1.res.conf  app-mail2.m.conf        workers.conf
    app-mail1.sac.conf  app-mail2.res.conf
    app-mail1.sis.conf  app-mail2.sis.conf
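One way to confirm that the connector directories really match is to fetch the other node's copy into a scratch directory and compare the two trees. The helper below is a sketch with a hypothetical name; the scratch path in the usage comment is an assumption as well.

```shell
#!/bin/sh
# Succeeds if two directory trees contain the same files with the same contents.
same_tree() {
    diff -r "$1" "$2" >/dev/null 2>&1
}

# Usage on Node A, after copying Node B's directory to a scratch location,
# e.g. with: scp -r nodeb:/etc/opt/scalix-tomcat/connector /tmp/connector-nodeb
#   same_tree /etc/opt/scalix-tomcat/connector /tmp/connector-nodeb \
#       && echo "connector config identical on both nodes"
```

If the check fails, running `diff -r` on the two directories without the redirection shows which files differ or are missing.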

Restarting Apache

After applying all Apache configuration changes, Apache should be restarted on both nodes:

service httpd restart

Scalix Cluster Integration

RedHat Cluster Suite (RHCS)

t.b.d.

Upgrading Scalix in the Cluster

Before an upgrade, the cluster should be in a healthy state, i.e. each node should be running one instance. On each node, follow the instructions for upgrading Scalix in the install guide, but specify the instance and hostname on the installer command line, e.g.:

    ./scalix-installer --instance=mail1 --hostname=mail1.scalixdemo.com

After performing the upgrade on a node hosting an instance which is not running the Management server, you will again need to disable SAC from running on this instance:

- De-register the Management Console and Server from the node:

[root@nodeb ~]# /opt/scalix-tomcat/bin/sxtomcat-webapps --del mail2 caa sac

- Restart Tomcat

[root@nodeb ~]# service scalix-tomcat restart mail2