TB/TB-2007-02-HACLUSTER

NOTE: This document is under construction. It also relies on some changes in the Scalix Installer that are not yet released as of 2007-04-13, which is when we started working on this. Stay tuned, but don't trust this (yet!). Florian


Overview

The most common high-availability setup is a two-node cluster in which each node runs an active instance of Scalix and the nodes are set up for mutual failover. With both nodes up and running, instance "mail1" runs on Node A and instance "mail2" runs on Node B.

If either node fails, the active instance on that node fails over to the other node, so that the one remaining node now runs both instances. For users, this is transparent because each instance is associated with a virtual hostname (instance hostname or service hostname) and IP address through which all user interaction takes place.

The Scalix message store and database are kept on external shared storage. Physically, both nodes can access the storage at all times. However, each instance has its own separate message store, so the cluster software manages access to the storage such that each message store is only accessed by the node that is currently running the associated instance.
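The mutual-failover layout can be illustrated with a small toy model in Python. It is only a sketch for reasoning about the setup, not how Red Hat Cluster Suite makes its decisions: the node names, the primary-node mapping and the volume group names follow this document's example, while the fallback rule (the first surviving node takes over) is an assumption. The second function shows that, whatever the node states, each instance's storage is activated by exactly one node.

```python
# Toy model of the two-node mutual-failover layout; NOT Red Hat Cluster Suite logic.
NODES = ["nodea", "nodeb"]
PRIMARY = {"mail1": "nodea", "mail2": "nodeb"}           # instance -> preferred node
VOLUME_GROUP = {"mail1": "vgmail1", "mail2": "vgmail2"}  # instance -> its own VG


def placement(up_nodes):
    """Map each instance to the node that runs it, or None if no node is up."""
    result = {}
    for instance, primary in PRIMARY.items():
        if primary in up_nodes:
            result[instance] = primary
        else:
            # Assumed fallback rule: the first surviving node takes over the instance.
            survivors = [n for n in NODES if n in up_nodes]
            result[instance] = survivors[0] if survivors else None
    return result


def active_vgs(up_nodes):
    """Map each running node to the volume groups it activates (each VG on one node)."""
    vgs = {n: [] for n in NODES if n in up_nodes}
    for instance, node in placement(up_nodes).items():
        if node is not None:
            vgs[node].append(VOLUME_GROUP[instance])
    return vgs


if __name__ == "__main__":
    print(placement({"nodea", "nodeb"}))  # both up: each instance on its primary node
    print(placement({"nodea"}))           # Node B down: Node A runs both instances
    print(active_vgs({"nodea"}))          # ...and activates both volume groups
```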

Various shared storage technologies can be used, such as multi-initiator SCSI or a SAN based on iSCSI or Fibre Channel. Scalix does not support the use of NFS for shared storage.

Scalix Enterprise Edition software provides multi-instance and virtual hostname support. Cluster monitoring and failover, as well as management of the shared storage, network addresses and hostnames, are provided by the clustering software. We have tested HA-Scalix with Red Hat Cluster Suite; it should be possible to create similar configurations with most other cluster products.

Prerequisites

Network Address and Hostname Layout

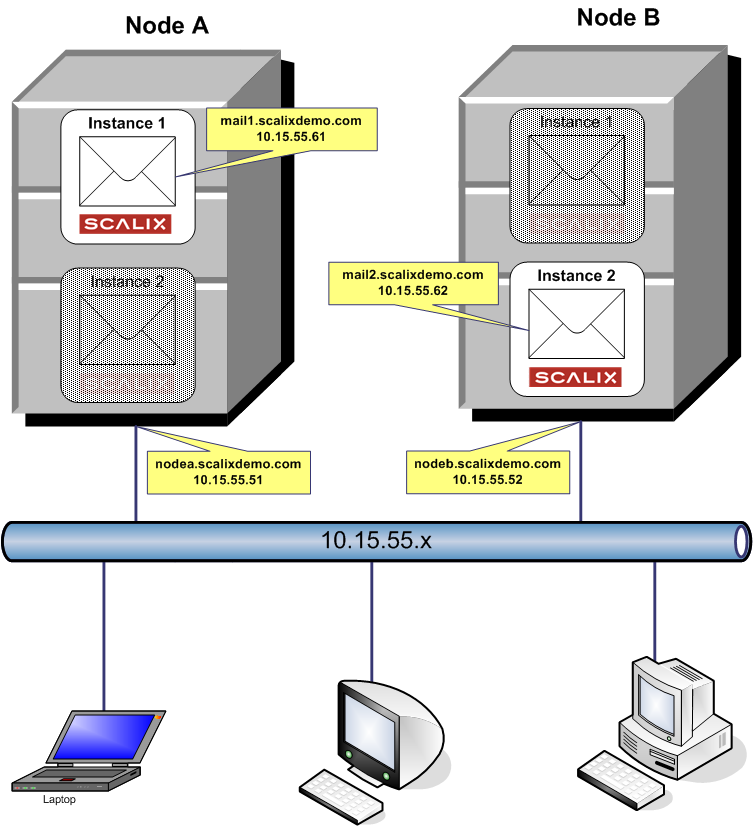

Each of the nodes has one static IP address of its own. This address is used only for administration: it stays with the node, never fails over and is not used to access Scalix services.

In the example, we use the following IP addresses and hostnames:

| | Node A | Node B |
|---|---|---|
| Hostname | nodea.scalix.demo | nodeb.scalix.demo |
| IP address | 192.168.100.11 | 192.168.100.12 |

Each of the instances also has an associated IP address. End users access the instance through this address, and it moves between the nodes together with the instance. In the example, we use the following IP addresses and hostnames:

| | Instance 1 | Instance 2 |
|---|---|---|
| Hostname | mail1.scalix.demo | mail2.scalix.demo |
| IP address | 192.168.100.21 | 192.168.100.22 |

All IP addresses and hostnames should be registered in DNS so that they can be resolved both forward and reverse. In addition, record the address mappings in the /etc/hosts file on each cluster node so that the cluster does not depend on DNS availability.
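As a minimal sketch, the following Python snippet renders /etc/hosts entries for the example address layout, listing each fully qualified name followed by its short name as an alias. The helper name hosts_lines is hypothetical; review the output before appending it to /etc/hosts on both nodes.

```python
# Example address layout from this document: (IP address, fully qualified name).
HOSTS = [
    ("192.168.100.11", "nodea.scalix.demo"),  # Node A admin address, never fails over
    ("192.168.100.12", "nodeb.scalix.demo"),  # Node B admin address, never fails over
    ("192.168.100.21", "mail1.scalix.demo"),  # instance mail1, moves with the instance
    ("192.168.100.22", "mail2.scalix.demo"),  # instance mail2, moves with the instance
]


def hosts_lines(entries):
    """Return /etc/hosts lines: IP address, canonical name, then short name as alias."""
    lines = []
    for ip, fqdn in entries:
        short = fqdn.split(".", 1)[0]
        lines.append(f"{ip}\t{fqdn}\t{short}")
    return lines


if __name__ == "__main__":
    print("\n".join(hosts_lines(HOSTS)))
```

The same four lines go on both nodes, since each node must be able to resolve both instance addresses regardless of where the instances currently run.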

Storage Configuration

Each of the instances needs its own dedicated storage area. Scalix recommends the use of LVM, as it enables filesystem resizing as well as filesystem snapshots for backup purposes. From an LVM point of view, each instance should have its own LVM Volume Group (VG), because a VG should only be activated and accessed by one host at a time; a separate VG per instance lets each instance move between the nodes independently of the other. For the example configuration, we will use a simple disk configuration as follows:

TODO: PICTURE OF DISK CONFIGURATION

For each instance, we take two disks or LUNs on the shared storage, delete any partitions currently on the disks, and then create a volume group and a logical volume for the message store and Scalix data area. Finally, create a filesystem on the logical volume:

On Node A, prepare the disks for instance mail1:

[root@nodea ~]# dd if=/dev/zero of=/dev/sdc bs=512 count=1
1+0 records in
1+0 records out
[root@nodea ~]# dd if=/dev/zero of=/dev/sdd bs=512 count=1
1+0 records in
1+0 records out
[root@nodea ~]# pvcreate /dev/sdc
Physical volume "/dev/sdc" successfully created
[root@nodea ~]# pvcreate /dev/sdd
Physical volume "/dev/sdd" successfully created
[root@nodea ~]# vgcreate vgmail1 /dev/sdc /dev/sdd
Volume group "vgmail1" successfully created
[root@nodea ~]# lvcreate -L 300G -n lvmail1 vgmail1
Logical volume "lvmail1" created
[root@nodea ~]# mkfs.ext3 /dev/vgmail1/lvmail1
mke2fs 1.35 (28-Feb-2004)
...
Writing superblocks and filesystem accounting information: done

On Node B, prepare the disks for instance mail2:

[root@nodeb ~]# dd if=/dev/zero of=/dev/sde bs=512 count=1
1+0 records in
1+0 records out
[root@nodeb ~]# dd if=/dev/zero of=/dev/sdf bs=512 count=1
1+0 records in
1+0 records out
[root@nodeb ~]# pvcreate /dev/sde
Physical volume "/dev/sde" successfully created
[root@nodeb ~]# pvcreate /dev/sdf
Physical volume "/dev/sdf" successfully created
[root@nodeb ~]# vgcreate vgmail2 /dev/sde /dev/sdf
Volume group "vgmail2" successfully created
[root@nodeb ~]# lvcreate -L 300G -n lvmail2 vgmail2
Logical volume "lvmail2" created
[root@nodeb ~]# mkfs.ext3 /dev/vgmail2/lvmail2
mke2fs 1.35 (28-Feb-2004)
...
Writing superblocks and filesystem accounting information: done

Then, on Node A, check that both volume groups are visible, activate them and mark them as clustered:

[root@nodea ~]# vgscan
Reading all physical volumes. This may take a while...
Found volume group "vgmail2" using metadata type lvm2
Found volume group "vgmail1" using metadata type lvm2
Found volume group "VolGroup00" using metadata type lvm2
[root@nodea ~]# vgchange -a y vgmail1
1 logical volume(s) in volume group "vgmail1" now active
[root@nodea ~]# vgchange -a y vgmail2
1 logical volume(s) in volume group "vgmail2" now active
[root@nodea ~]# vgchange -c y vgmail1
Volume group "vgmail1" successfully changed
[root@nodea ~]# vgchange -c y vgmail2
Volume group "vgmail2" successfully changed
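Because the per-instance sequences differ only in disk and instance names, generating them reduces copy-and-paste mistakes such as mismatched device names. The Python sketch below only builds the command strings for review and runs nothing; the function name is hypothetical, and the vg<instance>/lv<instance> naming and 300G size are taken from the example. Always confirm the device names on your own system first, because the dd commands destroy the existing partition table.

```python
def storage_commands(instance, disks, size="300G"):
    """Build (but do not run) the LVM and filesystem commands for one instance.

    Naming follows this document's example: vg<instance> and lv<instance>.
    """
    vg, lv = f"vg{instance}", f"lv{instance}"
    # Wipe the first sector (the old partition table) of each disk. Destructive!
    cmds = [f"dd if=/dev/zero of={disk} bs=512 count=1" for disk in disks]
    cmds += [f"pvcreate {disk}" for disk in disks]
    cmds.append(f"vgcreate {vg} {' '.join(disks)}")
    cmds.append(f"lvcreate -L {size} -n {lv} {vg}")
    cmds.append(f"mkfs.ext3 /dev/{vg}/{lv}")
    return cmds


if __name__ == "__main__":
    for line in storage_commands("mail1", ["/dev/sdc", "/dev/sdd"]):
        print(line)
```

Calling storage_commands("mail2", ["/dev/sde", "/dev/sdf"]) yields the Node B sequence.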

Cluster Software Setup

Red Hat Cluster Suite (RHCS)

Installation

Refer to the Red Hat documentation for hardware prerequisites and basic cluster software installation. Watch out for the following items:

- Install the cluster software on both nodes.

- Run system-config-cluster on the first node. After performing the basic configuration, copy the cluster.conf file (/etc/cluster/cluster.conf) to the other node, as described in the cluster documentation.

- You can use the default Distributed Lock Manager (DLM); you don't need the Grand Unified Lock Manager (GULM).

- If you have more than one cluster on the same subnet, make sure to change the default cluster name "alpha_cluster". The name is set in the cluster.conf file.
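If you prefer to script the rename, the Python sketch below sets the name attribute on the cluster.conf root element and increments config_version. Treat it as an assumption-laden example: it assumes the usual RHCS layout with a `<cluster name="..." config_version="...">` root element, assumes the version should be bumped on every change so that nodes recognize the newer configuration, and "scalix_cluster" is a hypothetical name. Note that ElementTree discards XML comments when parsing, so keep a backup of the original file.

```python
import xml.etree.ElementTree as ET


def rename_cluster(conf_xml, new_name):
    """Return cluster.conf text with a new cluster name and config_version + 1."""
    root = ET.fromstring(conf_xml)
    if root.tag != "cluster":
        raise ValueError("expected a <cluster> root element")
    root.set("name", new_name)
    # Assumption: bump the version so the edited file counts as a newer configuration.
    root.set("config_version", str(int(root.get("config_version", "0")) + 1))
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = '<cluster name="alpha_cluster" config_version="1"><clusternodes/></cluster>'
    print(rename_cluster(sample, "scalix_cluster"))
```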

Creating the Scalix service

The service that represents the Scalix instance to the cluster should be created before starting the Scalix installation. The service provides the virtual IP address and the shared storage mount point, so that Scalix can be installed onto them. To create the Scalix service, follow these steps:

1. Make sure the cluster software is started on both nodes:

service ccsd start; service cman start; service fenced start; service rgmanager start

2. Using system-config-cluster, add the service(s):

- Use the instance name, e.g. "mail1", as the service name

- Uncheck "Autostart this Service" and set recovery policy to "Disable"

- Create a new resource for this service to represent the virtual IP address defined for the instance

3. Bring up the services on the respective primary node:

clusvcadm -e mail1 -m nodea.scalix.demo
clusvcadm -e mail2 -m nodeb.scalix.demo
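For review or comparison, the Python sketch below builds a resource-manager fragment roughly like the one system-config-cluster writes for the settings above: one IP resource per instance and one service per instance with autostart disabled and the recovery policy set to "disable". The element and attribute names (rm, resources, ip address/monitor_link, service autostart/recovery, ip ref) reflect our understanding of the RHCS cluster.conf format and are assumptions; compare the output with the file the GUI actually produces before relying on it.

```python
import xml.etree.ElementTree as ET

# Instance name -> virtual IP address, from the example layout.
INSTANCES = {"mail1": "192.168.100.21", "mail2": "192.168.100.22"}


def rm_fragment(instances):
    """Build an <rm> element with one IP resource and one service per instance."""
    rm = ET.Element("rm")
    resources = ET.SubElement(rm, "resources")
    for ip in instances.values():
        ET.SubElement(resources, "ip", address=ip, monitor_link="1")
    for name, ip in instances.items():
        # Matches the settings above: no autostart, recovery policy "disable".
        service = ET.SubElement(rm, "service", name=name, autostart="0", recovery="disable")
        ET.SubElement(service, "ip", ref=ip)  # refer to the shared IP resource
    return rm


if __name__ == "__main__":
    print(ET.tostring(rm_fragment(INSTANCES), encoding="unicode"))
```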

OLD STUFF BELOW

- Install Scalix on both servers. One should have the Management Console installed and the other should not. For more on how to install without the Management Console, see the Scalix Installation Guide section about custom installations.

- Disable automatic startup of services.

- Change the name of the server back to the name of the physical server, still retaining the physical server’s IP address.

- Install and configure the cluster software, which recognizes the physical servers as real servers, and the virtual instances as services.

- Edit the instance.cfg file (see step 14 below).

Sample Setup Instructions

The instructions outlined below are provided as an example only. They are specific to Red Hat; if you use a different operating system, treat them as guidelines only.

Perform all of these steps on both servers.

To set up a dual-server failover cluster for Scalix:

1. Build the two servers as if they are simply two servers within the same organization. When installing the operating system, use the virtual hostname and IP address.

2. Using the operating system’s DNS configuration process, add the virtual instances to DNS just as you would a physical server. Then launch the Red Hat Network Configuration tool to change the server’s hostname from the name of the physical machine to the name of the virtual instance.

# system-config-network

In the window that opens, select the DNS tab and enter the name of the virtual instance, then save and exit the Network Configuration window. On other operating systems, use the KDE or GNOME network settings tool.

3. Back at the command line, edit the /etc/hosts file to enter the IP address of the physical server.

# vi /etc/hosts
# ifconfig

Type the IP address of the physical server, followed by the fully qualified virtual hostname and the short virtual name. For example:

10.17.120.52 virtual1.scalixdemo.com virtual1

Save the new information and exit vi.

4. Run a check to ensure that the physical server is functioning with the virtual IP address.

# ifconfig

5. Install Scalix on both servers. One should have the Management Console installed and the other should not. For more on how to install without the Management Console, see the Scalix Installation Guide section about custom installations. Before moving on, check that Scalix is up and running.

# omstat -s

6. Disable automatic startup of services. The services must be stopped first.

To stop the services:

# service rgmanager stop

# service fenced stop

# service cman stop

# service ccsd stop

To disable Scalix from restarting upon reboot:

# chkconfig --level 35 scalix off

# chkconfig --level 35 scalix-tomcat off

# chkconfig --level 35 scalix-postgres off

Alert: If you do not complete this step, you may corrupt your installation.

7. Reboot the server.

shutdown -r now

8. Verify that the physical server is now offline and the virtual service is up and running.

# clustat

9. Rename the server back to the name of the physical server, still retaining the physical server’s IP address. For instructions, see steps 2 and 3 above. This time, remove the entry you added to /etc/hosts in step 3, then save and exit vi.

# vi /etc/hosts

10. Reboot the server again.

# shutdown -r now

11. Restart the cluster.

# service ccsd start
# service cman start
# service fenced start
# service rgmanager start

12. Run another check to ensure that the physical server once again is functioning with the physical IP address.

# ifconfig

13. Install and configure the cluster software, which recognizes the physical servers as real servers, and the virtual instances as services. Configure the cluster software to include the two physical machines, and then configure the virtual instance of Scalix with your cluster configuration software program. This typically consists of:

- An IP address

- A shared file system

- A script

14. On both machines, define the virtual instances to Scalix by editing /etc/opt/scalix/<instance.cfg>: change the omname value to the virtual instance name, then set the autostart value to FALSE.
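Edits like this one (and the omautostart change in step 18) can be scripted. The Python sketch below assumes, without confirmation from this document, that the files use one KEY=VALUE entry per line; keys are matched case-insensitively and the file's own spelling is preserved. The function name and the sample keys OMNAME and OMAUTOSTART are hypothetical, so check the real file layout before using anything like this.

```python
import re

KEY_RE = re.compile(r"^\s*([A-Za-z_][A-Za-z0-9_]*)\s*=")


def set_config_values(text, updates):
    """Set KEY=VALUE entries in config text; keys are matched case-insensitively.

    Assumes one KEY=VALUE per line. Other lines (comments etc.) are kept as-is,
    and keys that are not found are appended at the end.
    """
    wanted = {key.upper(): value for key, value in updates.items()}
    seen = set()
    out = []
    for line in text.splitlines():
        match = KEY_RE.match(line)
        if match and match.group(1).upper() in wanted:
            key = match.group(1)  # keep the file's own spelling of the key
            out.append(f"{key}={wanted[key.upper()]}")
            seen.add(key.upper())
        else:
            out.append(line)
    out += [f"{key}={value}" for key, value in wanted.items() if key not in seen]
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    sample = "# hypothetical instance.cfg\nOMNAME=nodea\nOMAUTOSTART=TRUE\n"
    print(set_config_values(sample, {"OMNAME": "mail1", "OMAUTOSTART": "FALSE"}), end="")
```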

15. Relocate the information store to a shared directory so that it can switch back and forth between the two clustered servers.

# cd ~/mail1 (or mail2)
# mkdir temp
# mv /mail1* ./temp (or mail2)

16. Relocate the virtual instance to this machine.

# clusvcadm -r <virtual hostname> -n <physical domain name>

17. Move everything from temp to the shared disk.

# mv ./temp/* ./mail1

18. Edit the file /opt/scalix/global/config to change the omautostart value from true to false.

# vi /opt/scalix/global/config

19. Restart the virtual instance.

# service scalix start <virtual server hostname>

20. Telnet into the virtual instance to make sure it’s running:

# telnet <virtual server hostname> <port>
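Where telnet is not installed, a plain TCP connection test gives the same answer. This Python sketch (the port_open name is hypothetical) only reports whether a connection to host:port succeeds within the timeout; it does not speak any mail protocol.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and name-resolution errors
        return False
```

For example, port_open("mail1.scalix.demo", 25) checks the SMTP port, assuming the instance runs an SMTP listener; pass whichever port you would otherwise give to telnet.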

21. Stop Scalix.

service scalix stop <hostname>

22. Copy the configuration files from each machine to the other so that both have the exact same files.

cd /etc/opt/scalix-tomcat/connector/jk
scp *-<hostname 1>.* <hostname 2>:/etc/opt/scalix-tomcat/connector/jk

Then repeat, reversing the hostnames.

scp mail1:/etc/opt/scalix-tomcat/connector/jk/*-virtual1.* .

23. Edit the file workers.conf to include both mailnodes of the virtual hosts. Do this on both physical hosts.

cd /etc/opt/scalix-tomcat/connector/jk
vi workers.conf

24. Change the Tomcat shutdown port to 8006. Go to the instance's Tomcat configuration directory and edit server.xml:

cd /var/opt/scalix/<nn>/tomcat/conf
vi server.xml

Look for the following value:

<Server port="8005" shutdown="SHUTDOWN">

Change the port number from 8005 to 8006. While there, also change the short name of the instance to the fully qualified domain name in the three separate places that it appears.
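The port change can also be scripted. The Python sketch below rewrites only the port attribute of the `<Server ...>` element and leaves Connector ports untouched; it uses a regular expression rather than an XML parser so that comments and formatting in server.xml survive. The function name is hypothetical, and the sketch does not handle the hostname changes mentioned above.

```python
import re

# Matches the port attribute of the <Server ...> element only (not <Connector> ports).
SERVER_PORT_RE = re.compile(r'(<Server\b[^>]*?\bport\s*=\s*")(\d+)(")')


def set_shutdown_port(server_xml, new_port):
    """Return server.xml text with the <Server port="..."> value replaced."""
    new_text, count = SERVER_PORT_RE.subn(
        lambda m: f"{m.group(1)}{new_port}{m.group(3)}", server_xml, count=1
    )
    if count != 1:
        raise ValueError('no <Server port="..."> attribute found')
    return new_text
```

For example, set_shutdown_port(text, 8006) turns `<Server port="8005" shutdown="SHUTDOWN">` into `<Server port="8006" shutdown="SHUTDOWN">`.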

25. Repeat the entire sequence on the other virtual machine.

26. Change the server names in the following file to the virtual machine names.

cd /etc/httpd/conf.d
vi scalix-web-client.conf

At the bottom of the file, notice that the directory refers to the physical directory instead of the virtual one. You’re going to move these lines to one of the tomcat.conf files.

Copy the last seven lines of the file, then delete them.

Alias /omhtml/ /var/opt/scalix/n1/s/omhtml/

<Directory "/var/opt/scalix/n1/s/omhtml">

AllowOverride None

Order allow,deny

Allow from all

AddDefaultCharset off

</Directory>

27. Open /etc/opt/scalix-tomcat/connector/jk/instance-name1.conf:

cd /etc/opt/scalix-tomcat/connector/jk
vi instance-name1.conf

Paste these seven lines in, beginning with the third line. The first nine lines of the modified instance-name1.conf file should look as follows.

<VirtualHost virtual1.scalixdemo.com:80>

Include /etc/opt/scalix-tomcat/connector/jk/app-virtual1.*.conf

Alias /omhtml/ /var/opt/scalix/n1/s/omhtml/

<Directory "/var/opt/scalix/n1/s/omhtml">

AllowOverride None

Order allow,deny

Allow from all

AddDefaultCharset off

</Directory>

Perform this step on both physical hosts.
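Steps 26 and 27 amount to cutting the /omhtml/ Alias and Directory block from scalix-web-client.conf and pasting it directly after the Include line of the instance's VirtualHost file. The Python sketch below does that on the file contents as strings; the function name is hypothetical, and it assumes the block starts with an "Alias /omhtml/" line immediately followed by its `<Directory "...">` line. It reads and returns text only, so writing the files back (and keeping backups) is left to you.

```python
import re

# The Alias line plus the <Directory "..."> ... </Directory> block that follows it.
OMHTML_BLOCK_RE = re.compile(
    r'^Alias /omhtml/ [^\n]*\n<Directory "[^"]*">\n.*?^</Directory>\n?',
    re.MULTILINE | re.DOTALL,
)


def move_omhtml_block(web_client_conf, vhost_conf):
    """Cut the /omhtml/ block from one config text and paste it after the Include line.

    Returns (new_web_client_conf, new_vhost_conf).
    """
    match = OMHTML_BLOCK_RE.search(web_client_conf)
    if match is None:
        raise ValueError("no Alias /omhtml/ block found")
    block = match.group(0)
    if not block.endswith("\n"):
        block += "\n"
    remaining = web_client_conf[: match.start()] + web_client_conf[match.end():]

    lines = vhost_conf.splitlines(keepends=True)
    for i, line in enumerate(lines):
        if line.lstrip().startswith("Include "):
            lines.insert(i + 1, block)  # paste directly after the Include line
            break
    else:
        raise ValueError("no Include line found in the VirtualHost file")
    return remaining, "".join(lines)
```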